Guides for migrating CHT applications

What to do when CHT upgrades don't work as planned

Upgrades can “get stuck”, which mainly means that an upgrade has made no progress after many hours. Most likely, this manifests as the progress bars in the upgrade admin web UI not increasing and “sticking” at a certain percentage. Alternatively, the progress bars may disappear altogether.

When troubleshooting, consider making sure that:

A safe fix for any stuck upgrade is to restart all services. Any views that were being re-indexed will be picked up where they left off without losing any work. This should be your first step when troubleshooting a stuck upgrade.

After a restart, if you’re able to, go back into the admin web GUI and try the upgrade again. Consider trying this at least twice.

Issue #10296: Requests will fail due to fetch implementation if headers are not sent within 5 minutes

API error:

TypeError: fetch failed

at node:internal/deps/undici/undici:15445:13

at async /home/diana/.config/JetBrains/WebStorm2025.2/scratches/fetchtimeout.js:2:13 {

[cause]: HeadersTimeoutError: Headers Timeout Error

at FastTimer.onParserTimeout [as _onTimeout] (node:internal/deps/undici/undici:6821:32)

at Timeout.onTick [as _onTimeout] (node:internal/deps/undici/undici:528:17)

at listOnTimeout (node:internal/timers:608:17)

at process.processTimers (node:internal/timers:543:7) {

code: 'UND_ERR_HEADERS_TIMEOUT'

}

Fix:

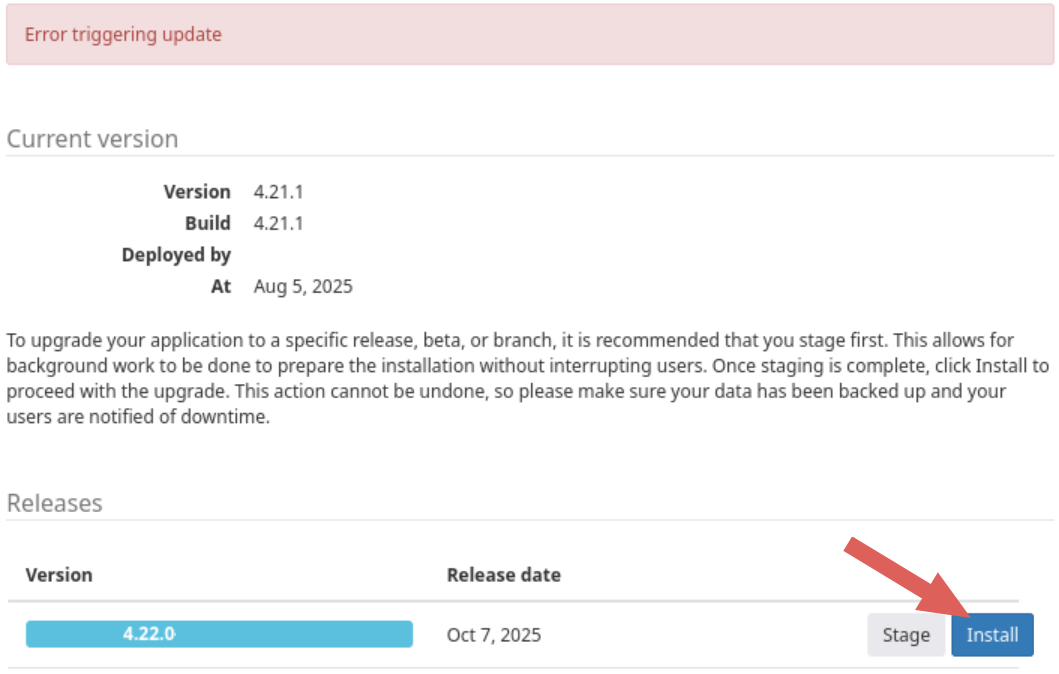

Admin web GUI showing ‘Error triggering update’ message at the top, and an arrow pointing to ‘Install’ button as the next step

Issue #9286: Starting an upgrade that involves view indexing can cause CouchDB to crash on large databases (>30m docs). The upgrade will fail and you will see the logs below when you have this issue.

HAProxy:

<150>Jul 26 20:57:39 haproxy[12]: 172.22.0.4,<NOSRV>,503,0,0,0,GET,/,-,medic,'-',217,-1,-,'-'

<150>Jul 26 20:57:40 haproxy[12]: 172.22.0.4,<NOSRV>,503,0,0,0,GET,/,-,medic,'-',217,-1,-,'-'

<150>Jul 26 20:57:41 haproxy[12]: 172.22.0.4,<NOSRV>,503,0,0,0,GET,/,-,medic,'-',217,-1,-,'-'

CouchDB:

[notice] 2024-07-26T20:52:45.229027Z couchdb@127.0.0.1 <0.10998.4> dca1387a05 haproxy:5984 172.22.0.4 medic GET /_active_tasks 200 ok 1

[notice] 2024-07-26T20:52:45.234397Z couchdb@127.0.0.1 <0.10986.4> 2715cd1e47 haproxy:5984 172.22.0.4 medic GET /medic-logs/_all_docs?descending=true&include_docs=true&startkey=%22upgrade_log%3A1722027165223%3A%22&limit=1 200 ok 6

[notice] 2024-07-26T20:52:45.468469Z couchdb@127.0.0.1 <0.11029.4> 3bf85c6071 haproxy:5984 172.22.0.4 medic GET /_active_tasks 200 ok 1

Fix:

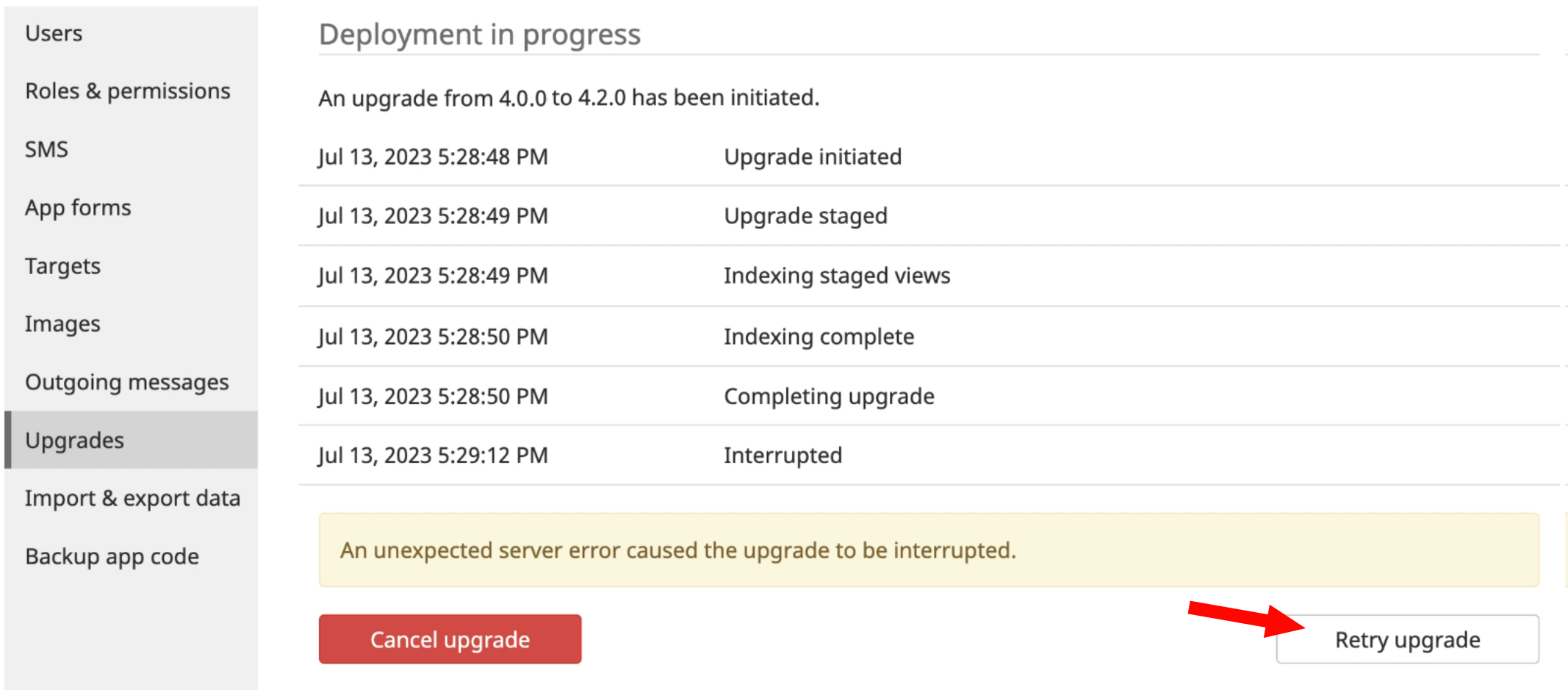

Admin web GUI showing Retry upgrade button in the lower right

Issue #9617: Starting an upgrade that involves view indexing can become stuck after indexing is finished

Upgrade process stalls while trying to index staged views:

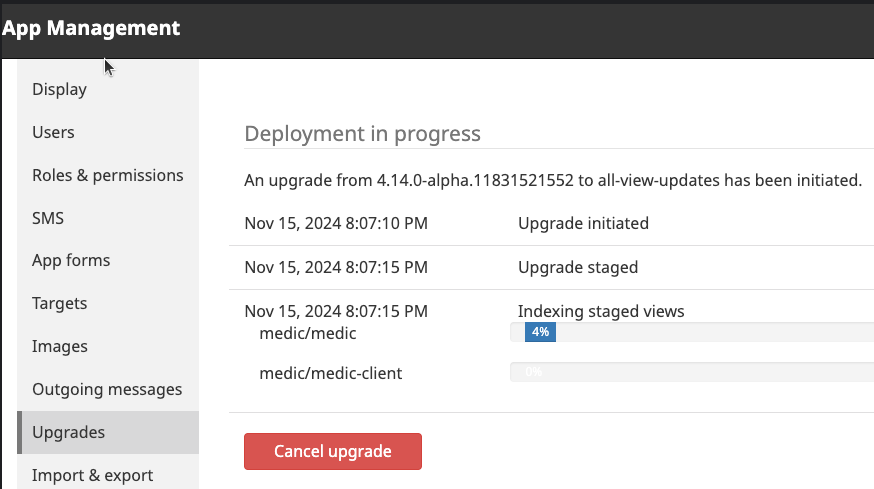

CHT Core admin UI showing upgrade progress bar stalled at 4%

Fix:

Issue #9284: A CouchDB restart in single-node Docker takes down the whole instance. The upgrade will fail and you will see the logs below when you have this issue.

HAProxy reports NOSRV errors:

<150>Jul 25 18:11:03 haproxy[12]: 172.18.0.9,<NOSRV>,503,0,1001,0,GET,/,-,admin,'-',241,-1,-,'-'

API logs:

StatusCodeError: 503 - {"error":"503 Service Unavailable","reason":"No server is available to handle this request","server":"haproxy"}

nginx reports:

2024/07/25 18:40:28 [error] 43#43: *5757 connect() failed (111: Connection refused) while connecting to upstream, client: 172.18.0.1,

Fix: Restart all services
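To confirm that HAProxy is returning `<NOSRV>` 503 responses like the ones shown for this issue and for #9286, a small helper can count matching log lines. This is a minimal sketch that assumes the comma-separated log layout from the samples above; the function name `count_nosrv_503` is made up for illustration.

```shell
# Count HAProxy log lines where no backend server was available
# (<NOSRV> followed by HTTP status 503). Reads log text on stdin.
# Assumption: the comma-separated layout matches the sample lines in this guide.
count_nosrv_503() {
  # grep -c prints 0 and exits non-zero when nothing matches,
  # so "|| true" keeps the helper from failing in that case.
  grep -c ',<NOSRV>,503,' || true
}
```

One way to use it is `docker logs <haproxy-container> 2>&1 | count_nosrv_503`, where `<haproxy-container>` is a placeholder for the name of your HAProxy container. A count that keeps growing suggests CouchDB is still unreachable.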

Issue: CouchDB is crashing during upgrade. The upgrade will fail and you will see the logs below when you have this issue. While there are two log scenarios, both have the same fix.

CouchDB logs scenario 1:

[error] 2024-11-04T20:42:37.275307Z couchdb@127.0.0.1 <0.29099.2438> -------- rexi_server: from: couchdb@127.0.0.1(<0.3643.2436>) mfa: fabric_rpc:all_docs/3 exit:timeout [{rexi,init_stream,1,[{file,"src/rexi.erl"},{line,265}]},{rexi,stream2,3,[{file,"src/rexi.erl"},{line,205}]},{fabric_rpc,view_cb,2,[{file,"src/fabric_rpc.erl"},{line,462}]},{couch_mrview,finish_fold,2,[{file,"src/couch_mrview.erl"},{line,682}]},{rexi_server,init_p,3,[{file,"src/rexi_server.erl"},{line,140}]}]

[error] 2024-11-04T20:42:37.275303Z couchdb@127.0.0.1 <0.10933.2445> -------- rexi_server: from: couchdb@127.0.0.1(<0.3643.2436>) mfa: fabric_rpc:all_docs/3 exit:timeout [{rexi,init_stream,1,[{file,"src/rexi.erl"},{line,265}]},{rexi,stream2,3,[{file,"src/rexi.erl"},{line,205}]},{fabric_rpc,view_cb,2,[{file,"src/fabric_rpc.erl"},{line,462}]},{couch_mrview,map_fold,3,[{file,"src/couch_mrview.erl"},{line,526}]},{couch_bt_engine,include_reductions,4,[{file,"src/couch_bt_engine.erl"},{line,1074}]},{couch_bt_engine,skip_deleted,4,[{file,"src/couch_bt_engine.erl"},{line,1069}]},{couch_btree,stream_kv_node2,8,[{file,"src/couch_btree.erl"},{line,848}]},{couch_btree,stream_kp_node,8,[{file,"src/couch_btree.erl"},{line,819}]}]

[error] 2024-11-04T20:42:37.275377Z couchdb@127.0.0.1 <0.7374.2434> -------- rexi_server: from: couchdb@127.0.0.1(<0.3643.2436>) mfa: fabric_rpc:all_docs/3 exit:timeout [{rexi,init_stream,1,[{file,"src/rexi.erl"},{line,265}]},{rexi,stream2,3,[{file,"src/rexi.erl"},{line,205}]},{fabric_rpc,view_cb,2,[{file,"src/fabric_rpc.erl"},{line,462}]},{couch_mrview,map_fold,3,[{file,"src/couch_mrview.erl"},{line,526}]},{couch_bt_engine,include_reductions,4,[{file,"src/couch_bt_engine.erl"},{line,1074}]},{couch_bt_engine,skip_deleted,4,[{file,"src/couch_bt_engine.erl"},{line,1069}]},{couch_btree,stream_kv_node2,8,[{file,"src/couch_btree.erl"},{line,848}]},{couch_btree,stream_kp_node,8,[{file,"src/couch_btree.erl"},{line,819}]}]

CouchDB logs scenario 2:

[info] 2024-11-04T20:18:46.692239Z couchdb@127.0.0.1 <0.6832.4663> -------- Starting compaction for db "shards/7ffffffe-95555552/medic-user-mikehaya-meta.1690191139" at 10

[info] 2024-11-04T20:19:47.821999Z couchdb@127.0.0.1 <0.7017.4653> -------- Starting compaction for db "shards/7ffffffe-95555552/medic-user-marnyakoa-meta.1690202463" at 21

[info] 2024-11-04T20:21:24.529822Z couchdb@127.0.0.1 <0.24125.4661> -------- Starting compaction for db "shards/7ffffffe-95555552/medic-user-lilian_lubanga-meta.1690115504" at 15

Fix: Give CouchDB more disk and restart all services

* See eCHIS Kenya Issue #2578 - a private repo and not available to the public
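Before giving CouchDB more disk, it can help to see how full the data volume currently is. The following is a minimal sketch that assumes POSIX `df -P` output; the helper name `disk_use_percent` and the path in the usage note are placeholders, not CHT conventions.

```shell
# Print the use percentage (without the % sign) from `df -P` output on stdin.
# In the POSIX format the fifth column of the first data row is the capacity,
# for example "91%".
disk_use_percent() {
  awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}
```

For example, `df -P /path/to/couchdb/data | disk_use_percent` prints a single number for the filesystem holding that path. Replace `/path/to/couchdb/data` with wherever your deployment stores CouchDB data.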

Issue: A number of pods were stuck in an indeterminate state, presumably because of failed garbage collection

API Logs:

2024-11-04 19:33:56 ERROR: Server error: StatusCodeError: 500 - {"message":"Error: Can't upgrade right now.

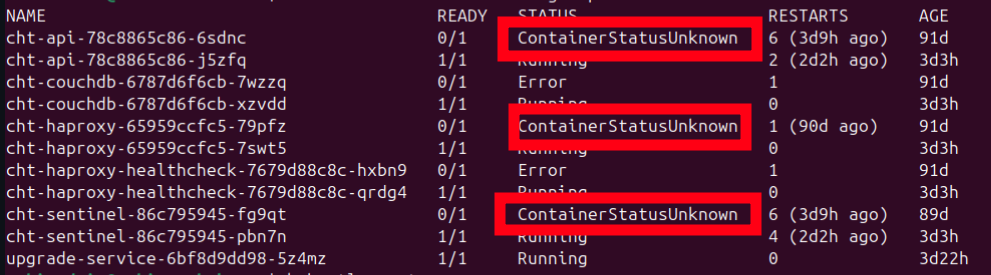

The following pods are not ready...."}Running kubectl get po shows 3 pods with status of ContainerStatusUnknown:

CLI screenshot showing 3 pods with STATUS of ContainerStatusUnknown

Fix: delete pods so they get recreated and start cleanly

kubectl delete po -l 'cht.service in (api, sentinel, haproxy, couchdb)'

* See eCHIS Kenya Issue #2579 - a private repo and not available to the public
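To see which pods are affected before deleting them, the `kubectl get po` table can be filtered by its STATUS column. This is a minimal sketch assuming the default column order (NAME, READY, STATUS, RESTARTS, AGE); the helper name `pods_with_status` is hypothetical.

```shell
# Print the names of pods whose STATUS column equals the given value.
# Expects the default `kubectl get po` table on stdin and skips the header row.
pods_with_status() {
  awk -v want="$1" 'NR > 1 && $3 == want { print $1 }'
}
```

For example, `kubectl get po | pods_with_status ContainerStatusUnknown` lists only the pods stuck in that state. Running it again after the delete should print nothing once the pods have been recreated cleanly.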

Issue: When a VM has been upgraded but the CHT Upgrade Service is out of date, an error can occur.

CHT Upgrade Service Logs:

Error response from daemon: client version 1.41 is too old. Minimum supported API version is 1.44, please upgrade your client to a newer version

Error while starting containers Error: Error response from daemon: client version 1.41 is too old. Minimum supported API version is 1.44, please upgrade your client to a newer version

at ChildProcess.<anonymous> (/app/src/docker-compose-cli.js:35:25)

at ChildProcess.emit (node:events:513:28)

at Process.ChildProcess._handle.onexit (node:internal/child_process:293:12)

Fix: Download the latest version of the upgrade service, restart the upgrade service, and retry the upgrade:

docker pull public.ecr.aws/s5s3h4s7/cht-upgrade-service:latest

docker compose up -d # reloads services with updated images

Issue: When a VM has been upgraded but the CHT Upgrade Service is out of date, an error can occur.

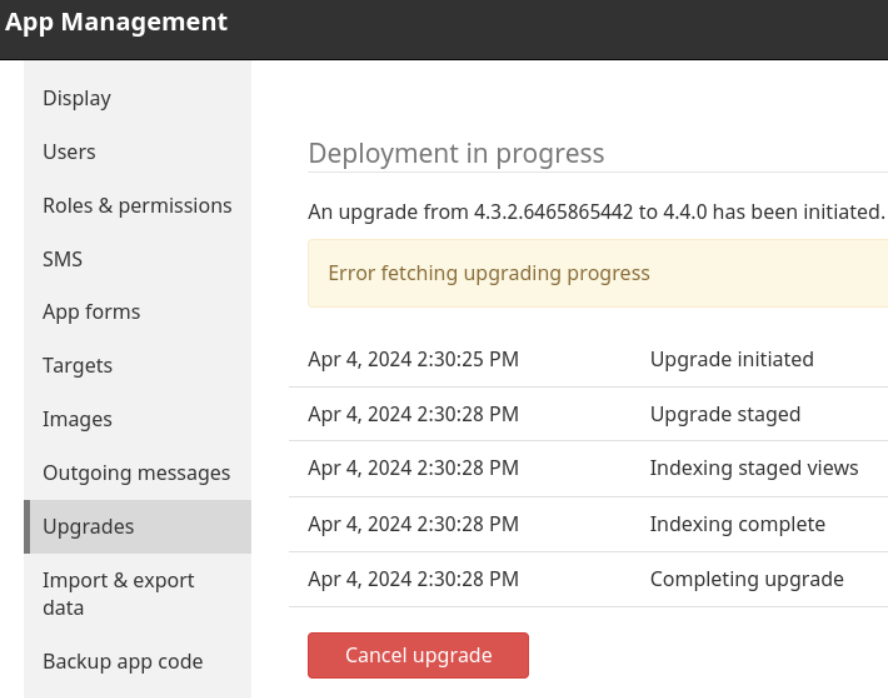

CHT Core admin UI showing the error Error fetching upgrade progress

There are multiple reasons for Error fetching upgrade progress to occur, so verify that the CHT Upgrade Service logs show:

2024-04-04T19:30:44.955097611Z ERROR: for healthcheck 'ContainerConfig'

2024-04-04T19:30:44.955101343Z

2024-04-04T19:30:44.955105243Z ERROR: for haproxy 'ContainerConfig'

2024-04-04T19:30:44.955109269Z Traceback (most recent call last):

2024-04-04T19:30:44.955112702Z File "/usr/bin/docker-compose", line 33, in <module>

2024-04-04T19:30:44.955116901Z sys.exit(load_entry_point('docker-compose==1.29.2', 'console_scripts', 'docker-compose')())

Fix: Download the latest version of the upgrade service, restart the upgrade service, and retry the upgrade:

docker pull public.ecr.aws/s5s3h4s7/cht-upgrade-service:latest

docker compose up -d # reloads services with updated images
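The two CHT Upgrade Service failures in this guide share the same fix but show different log signatures. The sketch below checks log text for either one. The patterns are copied from the sample logs in this guide and may not match other versions; the function name and the labels it prints are made up for illustration.

```shell
# Classify CHT Upgrade Service log text read from stdin.
# Prints one of three hypothetical labels:
#   docker-api-too-old  the "client version ... is too old" error
#   containerconfig     the docker-compose 'ContainerConfig' error
#   unknown             neither pattern found
# The body runs in a subshell, so the variable does not leak to the caller.
classify_upgrade_service_log() (
  logs=$(cat)
  if printf '%s\n' "$logs" | grep -q 'client version [0-9.]* is too old'; then
    echo 'docker-api-too-old'
  elif printf '%s\n' "$logs" | grep -q "ERROR: for .* 'ContainerConfig'"; then
    echo 'containerconfig'
  else
    echo 'unknown'
  fi
)
```

One way to use it is `docker logs <upgrade-service-container> 2>&1 | classify_upgrade_service_log`, where `<upgrade-service-container>` is a placeholder for your container's name. If the result is `unknown`, the cause is likely something other than an out-of-date upgrade service.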